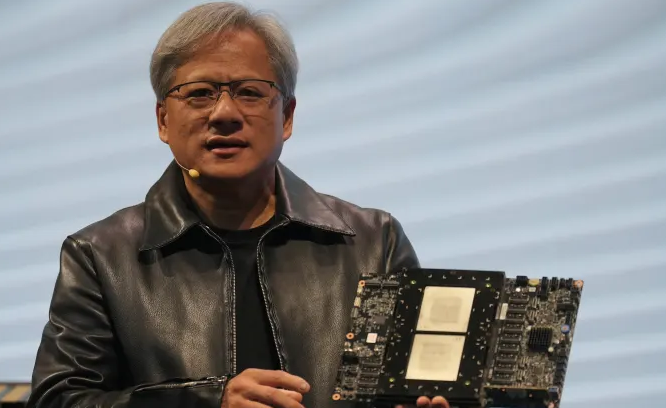

Nvidia unveils H200, its newest high-end chip for training AI models

Nvidia on Monday unveiled the H200, a graphics processing unit designed for training and deploying the kinds of artificial intelligence models that are powering the generative AI boom.

The new GPU is an upgrade from the H100, the chip OpenAI used to train its most advanced large language model, GPT-4. Big companies, startups and government agencies are all vying for a limited supply of the chips.

H100 chips cost between $25,000 and $40,000, according to an estimate from Raymond James, and thousands of them working together are needed to create the biggest models in a process called “training.”

Excitement over Nvidia’s AI GPUs has supercharged the company’s stock, which is up more than 230% so far in 2023. Nvidia expects around $16 billion of revenue for its fiscal third quarter, up 170% from a year ago.

The key improvement with the H200 is that it includes 141GB of next-generation “HBM3e” memory, which will help the chip perform “inference,” or the use of a large model after it’s trained to generate text, images or predictions.

Nvidia said the H200 will generate output nearly twice as fast as the H100. That’s based on a test using Meta’s Llama 2 LLM.

The H200, which is expected to ship in the second quarter of 2024, will compete with AMD’s MI300X GPU. AMD’s chip, similar to the H200, has additional memory over its predecessors, which helps fit big models on the hardware to run inference.
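To see why extra memory matters for inference, a rough back-of-the-envelope sketch can help. It estimates the memory needed just to hold a model's weights and compares that with a GPU's capacity. The 141GB figure comes from the article; the 80GB figure for the H100, the Llama 2 parameter counts and the 2-bytes-per-parameter (16-bit) assumption are illustrative inputs not stated above. Real deployments also need room for activations and caches, so this is a lower bound rather than a sizing guide.

```python
# Rough estimate: do a model's weights fit in one GPU's memory?
# Assumptions (not from the article): 16-bit weights (2 bytes per parameter),
# an 80GB H100, and Llama 2 sizes of 7B, 13B and 70B parameters.

H200_GB = 141  # stated in the article
H100_GB = 80   # assumption for comparison


def weights_gb(params_billions, bytes_per_param=2):
    """Memory for the weights alone, in decimal gigabytes.

    One billion parameters at one byte each is one gigabyte,
    so the result is simply billions of parameters times bytes per parameter.
    """
    return params_billions * bytes_per_param


def fits(params_billions, gpu_memory_gb, bytes_per_param=2):
    """True if the weights alone fit in the given GPU memory."""
    return weights_gb(params_billions, bytes_per_param) <= gpu_memory_gb


if __name__ == "__main__":
    for name, size in [("Llama 2 7B", 7), ("Llama 2 13B", 13), ("Llama 2 70B", 70)]:
        need = weights_gb(size)
        print(f"{name}: ~{need} GB of weights | "
              f"fits in 80GB: {fits(size, H100_GB)} | "
              f"fits in 141GB: {fits(size, H200_GB)}")
```

Under these assumptions, the largest Llama 2 model needs about 140GB for its weights alone, which exceeds a single 80GB card and only barely fits in 141GB, leaving almost no room for the working memory that inference also requires.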